The car ran smoothly along a fairly intricate two-lane, two-way public road. There were uphill and downhill sections and corners along the way, but it ran with minimal acceleration and deceleration, as if everything had been calculated seamlessly. On the curved sections (the car was driving on the left), the front left tire always carefully followed the edge of the road, tracing beautifully drawn arcs. Where the road became wider, the car moved outward, and where it became narrower, it moved inward. The speedometer hovered around the 60 km/h mark. I think the drive took about 30 minutes, during which time I didn’t sense any danger at all. A human driver was in the driver’s seat in case of an emergency, but they didn’t really have to do anything. The drive, performed by sensors and algorithms, was just as good as you would get from a seasoned professional.

***

The theme of this third issue is “A: Autonomous”. Putting autonomous driving into practical use involves a web of quite complex issues: not only “autonomous driving technology”, but also “systems and regulations” at national, governmental, and international levels, and “social acceptance” of its practical application. I would therefore like to explain these things in two parts. In this first half, I will cover “The beginning of autonomous driving” and “Autonomous driving: the technology”.

***

The beginning of autonomous driving

As previously mentioned in “Connected”, the idea of “autonomous driving” has been around since before the Second World War. After the war, it was developed for practical use based on a method of sending signals from the road to the car to control it. These tests by GM and RCA can be considered the origin of today’s “infrastructure coordination”, but for one reason or another they never succeeded. At the time, one of the factors limiting the development of automated driving was that “the computers were too big to fit in the car”.

However, as described by Moore’s Law, the situation gradually changed. Dr. Gordon Moore, now chairman emeritus of Intel, put forward the idea in 1965; in its popular form, it states that “computing power per CPU doubles every 18 months.” According to this law, taking 1965 as the starting point, processing capacity would have doubled by the middle of 1966 and quadrupled by 1968. Initially, this pace did not seem of major importance, but the gains compound over time. In other words, 30 years later in 1995, for example, capacity would have grown by a factor of 2 to the 20th power, that is, 1,048,576 times.
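The arithmetic behind those figures can be checked with a short sketch. It simply takes the 18-month doubling period quoted above at face value; the function name `growth_factor` and the starting point of early 1965 are assumptions made for illustration.

```python
# A minimal sketch of the compounding described above, assuming capacity
# doubles exactly once every 18 months (the popular form of Moore's Law).
DOUBLING_PERIOD_MONTHS = 18


def growth_factor(months_elapsed: int) -> float:
    """Return how many times capacity has multiplied after `months_elapsed` months."""
    doublings = months_elapsed / DOUBLING_PERIOD_MONTHS
    return 2.0 ** doublings


# Months counted from the start of 1965 (an assumed starting point).
for label, months in [("mid-1966", 18), ("1968", 36), ("1995", 360)]:
    print(f"{label}: x{growth_factor(months):,.0f}")
# mid-1966: x2
# 1968: x4
# 1995: x1,048,576
```

Thirty years contain twenty 18-month periods, which is where the exponent of 20 comes from.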

Right at that time in Europe, the development of autonomous driving was about to enter a new era. Between 1987 and 1995, the European Commission invested a massive one billion dollars to promote an automated driving development project called Eureka PROMETHEUS. An autonomous driving prototype (based on the Mercedes S-Class) developed as part of the project completed a round trip of more than 1,600 km on the autobahn between Munich and Copenhagen, with a maximum distance between human interventions of 175 km. During the drive, human intervention accounted for only 9 km, while the rest of the journey was controlled entirely by computers installed in the car. In this way, the “autonomous” form of automated driving that is mainstream today was developed for the first time.

Soon afterwards, in 2000, there was a big shift in the United States. Congress decided that “by 2015, a third of all vehicles deployed to the front lines in war will be driven automatically.” In response to this, DARPA (Defense Advanced Research Projects Agency) started a robot car race called the DARPA Grand Challenge in 2004. Many universities, companies, and research institutions took part in the race, which offered $1 million in prize money. As a result, the top prize-winning engineers led the way in developing autonomous driving in the United States.

Eventually, in May 2012 in Nevada, Google began the first demonstration tests of self-driving cars on public roads. Many readers will remember the photos of a Prius with a large cylindrical LiDAR mounted on the roof. To be honest, that was also the first time I became aware of autonomous driving as a reality. Sebastian Thrun, the German engineer at Google who developed this self-driving Prius, was the winner of the 2005 DARPA Grand Challenge (as a member of the Stanford University team). This has since developed into a fierce competition to implement autonomous driving, and with governments themselves holding the reins, various players such as automobile OEMs, suppliers, startups, and university research institutes are competing head-to-head every day.

Autonomous driving: the technology

For details on the regulations on autonomous driving levels, please refer to the “Safety Guidelines for Self-Driving Vehicles” (Japanese only) published by the Ministry of Land, Infrastructure, Transport and Tourism in September last year. Here, I would like to explain some of the technical matters related to autonomous driving, focusing on the key concept of ODD.

ODD (Operational Design Domain) is defined as “specific conditions related to a designed driving environment that is the premise for the normal operation of an automated driving system”, referring to conditions such as the road, geography, weather, time of day, and speed. The more conditional settings there are, the easier it is to achieve automated driving. Conversely, the fewer the conditions, the more difficult it will be. In other words, automated driving technology needs to be considered in a matrix combined with ODD rather than being simply organized by “levels” alone.
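One way to picture an ODD is as a set of conditions that the current driving situation either satisfies or does not. The sketch below is only an illustration of that idea: the class names, field names, and example values are hypothetical and are not taken from any guideline or product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperationalDesignDomain:
    """Hypothetical ODD: the conditions under which the system is designed to work."""
    allowed_road_types: frozenset
    allowed_weather: frozenset
    max_speed_kmh: float
    daytime_only: bool


@dataclass(frozen=True)
class DrivingSituation:
    """Hypothetical snapshot of the current driving environment."""
    road_type: str
    weather: str
    speed_kmh: float
    is_daytime: bool


def within_odd(odd: OperationalDesignDomain, situation: DrivingSituation) -> bool:
    """Return True only if every ODD condition is satisfied."""
    return (
        situation.road_type in odd.allowed_road_types
        and situation.weather in odd.allowed_weather
        and situation.speed_kmh <= odd.max_speed_kmh
        and (situation.is_daytime or not odd.daytime_only)
    )


# A narrow ODD with many restrictions (illustrative values only).
narrow_odd = OperationalDesignDomain(
    allowed_road_types=frozenset({"expressway"}),
    allowed_weather=frozenset({"clear", "rain"}),
    max_speed_kmh=60.0,
    daytime_only=False,
)

traffic_jam = DrivingSituation("expressway", "clear", 40.0, True)
city_street = DrivingSituation("urban", "clear", 40.0, True)

print(within_odd(narrow_odd, traffic_jam))  # True
print(within_odd(narrow_odd, city_street))  # False
```

The more restrictive each field is, the fewer situations the system has to handle, which is why a narrow ODD is easier to achieve at a given level.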

Such conditions can be configured relatively easily for, say, a vehicle for a mobile service that can be assumed to run only on a fixed route, like a train on its tracks. For a privately owned car, on the other hand, the more conditions a function requires, the less commercial value it has. In other words, autonomous driving will evolve along different routes in the two segments of “Logistics/Mobile Service Vehicles” and “Personal Cars” (see the figure below from page 9 of the Cabinet Office SIP (Strategic Innovation Promotion Program) document on automated driving systems, Japanese only).

Audi has introduced the traffic jam pilot, a “Level 3 automated driving function that allows driving on expressways at speeds of 60 km/h or less”, in its current A8 model. (Note: as explained below, due to regulatory issues, it has not yet been commercialized.) In other words, the condition “expressway, at speeds of 60 km/h or less” is the ODD for Level 3 driving here. But why do such conditions need to be configured? One reason is said to have to do with the sensors.

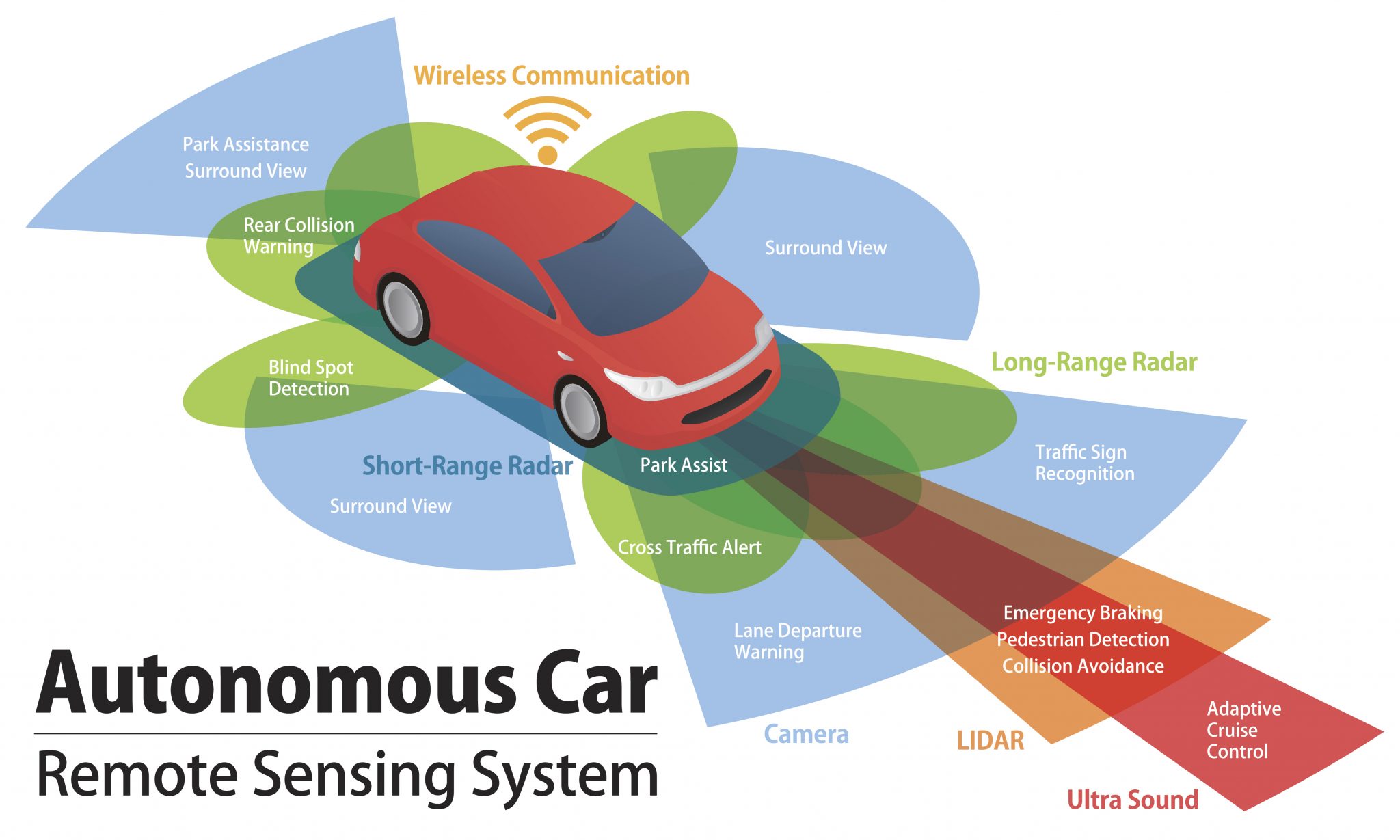

Autonomous driving consists of three stages: “cognition”, “judgment”, and “operation”. Of the three, “cognition” relies on sensor technology and can be described as the foundation for the other two: without cognition, judgment and operation cannot be achieved. How much can be recognized, however, depends on the sensor technology. Cars are already fitted with various sensors, whose roles are divided according to their sensing range. These include cameras, LiDAR (laser scanners), millimeter-wave radar, and sound wave detection (sonar), of which LiDAR is used to ensure long-range forward visibility.

In the case of Level 3 automated driving, principal control of the driving is transferred from the system to a human driver when “it is difficult to continue operation (of the automated driving system).” This transfer of principal control, also known as transition, override, or takeover, is said to take about six seconds (+ α). In other words, the automated driving system has to know six seconds in advance that something is likely to happen and decide to hand over control to the driver. To do that, it needs to recognize the environment ahead of it while driving. A vehicle driving at 60 km/h travels 100 meters in six seconds, so it needs to recognize the situation 100 meters ahead. (By the way, this recognition is not simply “there is an object”, but also what the object is, for example whether it is a person or a tire. It therefore involves image recognition that can distinguish between objects, for which AI is also used.) In other words, the ODD of “60 km/h or less = traffic jam” was configured as a result of a cognitive ability of “up to 100 m ahead”.

Therefore, if, for argument’s sake, you want to use Level 3 functions at 120 km/h (performing actions other than driving, such as reading emails), a sensor that can recognize objects up to 200 meters away is required. However, 200 m is said to be the limit of what LiDAR can detect with current sensing technology, and LiDAR is not well suited to installation in a private car. Although it can deliver the most advanced performance, it is very large and extremely expensive, so very few people want to pay out of their own pocket for an expensive car with that kind of appearance. If LiDAR becomes smaller and cheaper in the future, or if more practical long-distance recognition technology comes into use, the ODD can be broadened. In this way, the ODD is configured taking technical requirements (including cost) into account.
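The relationship between speed, takeover time, and the required recognition distance is simple enough to sketch in a few lines. The six-second takeover time comes from the text above (ignoring the “+ α” margin); the function names are assumptions made for illustration.

```python
# A minimal sketch: distance covered during the takeover period,
# assuming constant speed and a fixed six-second handover.
TAKEOVER_TIME_S = 6.0


def required_sensing_range_m(speed_kmh: float,
                             takeover_time_s: float = TAKEOVER_TIME_S) -> float:
    """Distance (m) the vehicle travels while control is handed to the driver."""
    return speed_kmh * 1000.0 * takeover_time_s / 3600.0


def max_speed_kmh(sensing_range_m: float,
                  takeover_time_s: float = TAKEOVER_TIME_S) -> float:
    """Highest speed (km/h) at which the given sensing range still covers the takeover."""
    return sensing_range_m * 3600.0 / (takeover_time_s * 1000.0)


for speed in (60, 120):
    print(f"{speed} km/h -> {required_sensing_range_m(speed):.0f} m")
# 60 km/h -> 100 m
# 120 km/h -> 200 m

print(f"100 m -> {max_speed_kmh(100):.0f} km/h")
# 100 m -> 60 km/h
```

Read in reverse, the same formula shows how a sensing limit turns directly into the speed condition of the ODD.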

By the way, in the above case, the range that is “detectable in advance” is limited to six seconds, but one option for broadening the ODD is to widen the prediction range so that the system can read further ahead. In addition, prediction is an issue not only at Level 3, but also at Level 4. First of all, data collected from the various sensors related to cognition is combined into a single “situation recognition” (sensor fusion). AI is also used here: sensor data from a variety of situations is captured and used for machine learning. In addition to actual data, virtual simulations are used to reproduce all possible situations. In other words, this requires advanced computing technology and a larger computer, which raises the problems of storage space, cost, and the electric power needed to operate it.

In addition, in order to operate the system stably, there is the problem of “ensuring redundancy”. Redundancy is originally an IT term which means, “operating with a spare system normally deployed as a backup in order that, in case of damage to one part of the system, the functioning of the entire system can continue to be maintained.” In other words, a backup system must be taken into account.

Also, for Level 3, what happens if an accident occurs because the human driver cannot cope with a critical situation, for example because of the six-second delay? According to the rules, it is the responsibility of the human driver, but “would anyone really buy such a dangerous system?” As a result, commercializing Level 3 requires equipping the vehicle with a Minimum Risk Maneuver function that guarantees the safety of the driver and passengers even in the worst-case scenario.
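The handover logic described in this section can be sketched as a small state machine. This is a simplified illustration, not how any production system is implemented: the mode names, the `next_mode` function, and the rule that the Minimum Risk Maneuver starts once the six-second budget expires are all assumptions, and what the maneuver itself does is outside the sketch.

```python
from enum import Enum, auto


class Mode(Enum):
    AUTOMATED = auto()
    TAKEOVER_REQUESTED = auto()
    MANUAL = auto()
    MINIMUM_RISK_MANEUVER = auto()


# Takeover time from the text; treated here as a hard budget (an assumption).
TAKEOVER_BUDGET_S = 6.0


def next_mode(mode: Mode, *, within_odd: bool, driver_has_control: bool,
              seconds_since_request: float) -> Mode:
    """Return the next driving mode for one decision step."""
    if mode is Mode.AUTOMATED:
        # Leaving the ODD triggers a request for the driver to take over.
        return Mode.AUTOMATED if within_odd else Mode.TAKEOVER_REQUESTED
    if mode is Mode.TAKEOVER_REQUESTED:
        if driver_has_control:
            return Mode.MANUAL
        if seconds_since_request >= TAKEOVER_BUDGET_S:
            # Driver did not respond in time: fall back to the safe maneuver.
            return Mode.MINIMUM_RISK_MANEUVER
        return Mode.TAKEOVER_REQUESTED
    # MANUAL and MINIMUM_RISK_MANEUVER are treated as final in this sketch.
    return mode


print(next_mode(Mode.AUTOMATED, within_odd=False,
                driver_has_control=False, seconds_since_request=0.0).name)
# TAKEOVER_REQUESTED
print(next_mode(Mode.TAKEOVER_REQUESTED, within_odd=False,
                driver_has_control=False, seconds_since_request=6.0).name)
# MINIMUM_RISK_MANEUVER
```

The point of the fallback branch is that safety no longer depends on the driver responding within the time budget.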

As described above, the ODD is configured as the product of various factors, and practical automated driving systems are developed within those configuration requirements. For example, Level 4 is generally expected to be achieved in the mid-2020s. Alphabet has already started a Level 4 mobile service in Phoenix, and Baidu will do so in China this year, while GM, Ford, FCA, and others have announced plans to start operation in 2021, Daimler in the first half of the 2020s, Nissan by 2022, and Toyota by 2026. But here too, what matters is not only the “Level 4” label itself, but also the content of the ODD configuration on which it is premised.

(To be continued)